Job DSL Part II

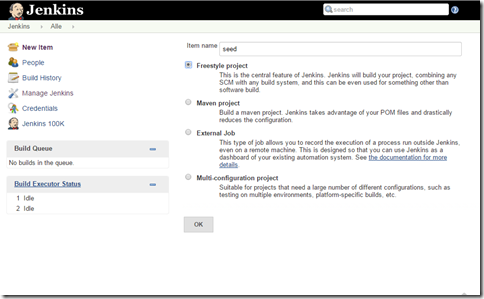

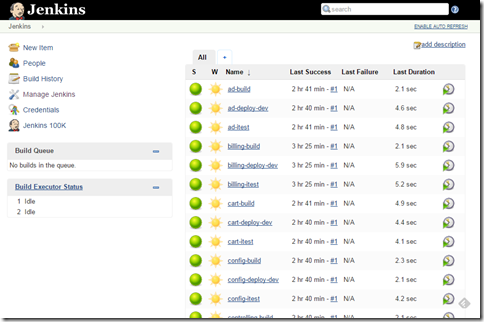

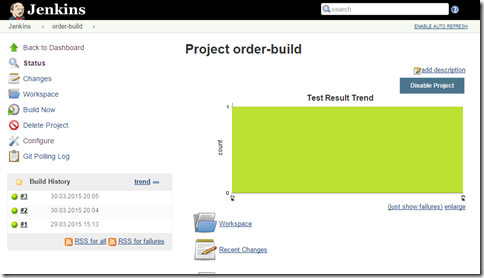

In the first part of this little series I talked about some of the difficulties you have to tackle when dealing with microservices, and how the Job DSL Plugin can help you automate the creation of Jenkins jobs. In today’s installment I will show you some of the benefits in maintenance. We will also automate the job creation itself and create some views.

Let’s recap what we have so far. We created our own DSL to describe the microservices. Our build Groovy script iterates over the microservices and creates a build job for each one using the Job DSL.

So what if we want to alter our existing jobs? Let’s give it a try: we’d like to have JUnit test reports in our jobs. All we have to do is extend our job DSL a little by adding a JUnit publisher:

    freeStyleJob("${name}-build") {
      ...
      steps {
        maven {
          mavenInstallation('3.1.1')
          goals('clean install')
        }
      }
      publishers {
        archiveJunit('/ta